Credo AI is building a set of tools to help organizations leverage AI solutions compliantly, ethically, and responsibly. In this article, Co-founder Navrina Singh discusses the strengths and risks of using AI in hiring, current approaches to leveraging AI and their challenges, and how Credo AI is building a platform that enables the whole ecosystem to operate compliantly.

Artificial Intelligence – A Powerful Tool with Potentially Unintended Consequences

Artificial Intelligence has changed nearly every aspect of our lives, including how enterprises source, screen, and hire candidates. With the emergence of more Automated Employment Decision Tools (AEDT), companies are using AI to streamline the recruitment process, optimize internal mobility, personalize the candidate experience and even mitigate human biases. But as the adoption of AI-hiring tools increases, so does the potential for unintended consequences.

Important Statistics About Using AI in HR from SHRM:

- Nearly 1 in 4 organizations report using automation or artificial intelligence (AI) to support HR-related activities, including recruitment and hiring.

- Just 16% of employers with fewer than 100 workers use automation or AI, compared with 42% of those with 5,000 or more workers.

- While 30% say the use of automation or AI improves their ability to reduce potential bias in hiring decisions, 46% would like to see more information or resources on how to identify any potential bias when using these tools.

- 85% of employers that use automation or AI say it saves them time and/or increases their efficiency.

- 64% of HR professionals say their organization’s automation or AI tools automatically filter out unqualified applicants.

In the simplest terms, AI is extremely effective at pattern recognition. We train AI systems to recognize patterns in data and then use those patterns to analyze new data. For example, we might train an AI tool on a large set of resumes from candidates previously hired for a role so it can predict whether a new candidate’s resume indicates a good fit for that role. Or we might simply use AI to extract information from resumes and build candidate profiles automatically.

However, the problem with data and pattern matching is that we may unintentionally train our AI system to detect patterns that are not actually helpful to the decision or prediction we’re trying to make. For example, suppose most of the resumes we use to train our candidate matching system are from men. In that case, we may unintentionally train the system to be harmfully biased against women—even though gender has nothing to do with the job.
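One simple way to catch the kind of skew in the mostly-male resume example before training is to measure how each group is represented in the training data. The sketch below is a minimal illustration under stated assumptions, not Credo AI’s method: the record format, the `gender` field, the helper names, and the 30% `min_share` threshold are all hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Mapping


def group_shares(records: Iterable[Mapping[str, str]], attribute: str) -> Dict[str, float]:
    """Return each group's share of the records for one demographic attribute."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())  # assumes at least one record
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares: Dict[str, float], min_share: float) -> List[str]:
    """List groups whose share falls below a chosen (hypothetical) minimum."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical training set: resumes of past hires, 8 from men and 2 from women.
training_resumes = [{"gender": g} for g in ["male"] * 8 + ["female"] * 2]

shares = group_shares(training_resumes, "gender")
print(shares)                         # {'male': 0.8, 'female': 0.2}
print(underrepresented(shares, 0.3))  # ['female']
```

Balanced representation alone does not guarantee a fair model, because other resume features can act as proxies for gender, so a check like this complements an assessment of the model’s actual outcomes rather than replacing it.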

The risk of unintended harmful bias is just one of many risks associated with AI systems. Data privacy, security, robustness, and explainability are other challenges these systems can face, and managing these risks is critical to using AI effectively. Yet many HR organizations aren’t fully aware of every AI tool they already use, let alone equipped with the tools and skills to manage and monitor them. These problems are compounded when the AI systems come from third-party vendors.

Currently, no federal laws or regulations require employers to disclose their use of AI hiring tools. However, more governing bodies are taking steps to ensure AI systems are designed and deployed responsibly. It is important to note that enterprises already have responsibilities to prevent disparate impact under Title VII of the Civil Rights Act of 1964 and U.S. Equal Employment Opportunity Commission (EEOC) guidelines.

Regulations in the works, like the EU AI Act, would provide oversight for high-risk AI systems, including those used in recruitment. New York City is one of the first jurisdictions to require that any automated employment decision tool (AEDT) used on NYC-based candidates or employees undergo an annual independent bias audit. Local Law No. 144 will go into effect on January 1, 2023. Other jurisdictions, such as California and Oregon, are following suit, with more on the way.


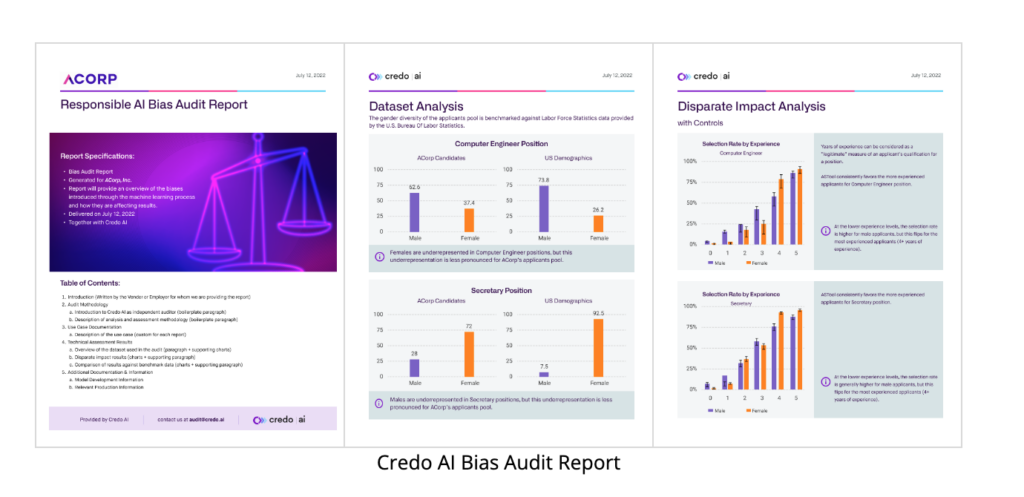

Proper tools to monitor, assess and report on the performance of AI systems are also critical to putting guidelines, frameworks and legislation into action. One of the challenges with recent legislation, like NYC’s Local Law 144, is the lack of guidance on what a good bias audit looks like, making it difficult for organizations to conduct these assessments at scale.

The law specifies only high-level requirements for disparate impact assessment across gender, race, and ethnicity, and it does not cover other protected categories, such as disability, age, and sexual orientation. Beyond measuring disparate impact, Credo AI believes these audits should also document how an AI system was developed, including design decisions relevant to the system’s fairness, the source and composition of the training data, and any bias mitigation techniques the development team used.

This additional information will provide valuable context for the technical disparate impact assessment results, which ultimately will help employers—and their employees and candidates—better understand how the AI system works and its potential limitations.
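A common way to quantify disparate impact is the impact ratio: each group’s selection rate divided by the selection rate of the most-selected group. The sketch below assumes a tool that simply advances or rejects candidates, uses hypothetical applicant counts, and flags ratios below 0.8, borrowing the "four-fifths rule" of thumb from the EEOC’s Uniform Guidelines on Employee Selection Procedures as an assumption. Local Law 144 itself does not set a pass/fail threshold, and the function names are illustrative only.

```python
from typing import Dict, List, Tuple


def selection_rates(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to (candidates selected) / (total candidates)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any candidates selected")
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(ratios: Dict[str, float], threshold: float = 0.8) -> List[str]:
    """Return groups whose impact ratio falls below the threshold (assumed four-fifths rule)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical outcomes per group: (candidates advanced by the tool, total candidates).
outcomes = {
    "female": (30, 100),
    "male": (50, 100),
}

ratios = impact_ratios(outcomes)
print({group: round(ratio, 2) for group, ratio in ratios.items()})  # {'female': 0.6, 'male': 1.0}
print(flag_adverse_impact(ratios))                                 # ['female']
```

The same calculation applies to race and ethnicity categories by swapping in the corresponding counts; the numbers alone do not explain *why* a gap exists, which is where the development context described above becomes useful.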

Approaches to Leveraging AI Responsibly

Responsible AI governance for HR and recruitment requires coordination among vendors, employers, and the candidates affected. In the case of NYC Local Law 144, employers are responsible for ensuring that every tool (built in-house or procured from a third party) has gone through a bias audit by an independent auditor and that the results are made public.

Employers first need to identify whether their organization uses AEDTs for recruiting or HR and, if so, conduct an internal analysis to establish a baseline for algorithmic fairness and disparate impact. They then need to engage an independent auditor to conduct an official audit, repeated annually for as long as the tool is in use. Employers must also notify candidates that these tools are being used and provide an alternative selection process.
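The obligations described above can be tracked per tool with a simple inventory record. The sketch below is a hypothetical checklist, not a Credo AI feature: the `AEDTRecord` fields and `compliance_gaps` helper are illustrative names that mirror this section, and the audit check assumes the most recent independent audit must be no more than one year old on the date the tool is used.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AEDTRecord:
    """Hypothetical inventory entry for one automated employment decision tool."""
    name: str
    last_independent_audit: Optional[date]  # None if the tool was never audited
    audit_results_published: bool
    candidates_notified: bool
    alternative_process_offered: bool


def one_year_after(d: date) -> date:
    """Same calendar date one year later; Feb 29 rolls back to Feb 28."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:  # Feb 29 does not exist in the following year
        return d.replace(year=d.year + 1, day=28)


def compliance_gaps(tool: AEDTRecord, use_date: date) -> List[str]:
    """List the checklist items this tool fails on the given date of use."""
    gaps = []
    audit = tool.last_independent_audit
    if audit is None or not (audit <= use_date <= one_year_after(audit)):
        gaps.append("no independent bias audit within the past year")
    if not tool.audit_results_published:
        gaps.append("audit results not published")
    if not tool.candidates_notified:
        gaps.append("candidates not notified")
    if not tool.alternative_process_offered:
        gaps.append("no alternative selection process offered")
    return gaps


# Hypothetical tool audited on March 1, 2022, with no alternative process yet.
screener = AEDTRecord(
    name="resume-screener",
    last_independent_audit=date(2022, 3, 1),
    audit_results_published=True,
    candidates_notified=True,
    alternative_process_offered=False,
)
print(compliance_gaps(screener, date(2023, 1, 15)))  # ['no alternative selection process offered']
print(compliance_gaps(screener, date(2023, 6, 1)))   # audit has also expired by this date
```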

HR vendors should be incentivized to help their customers understand and trust these tools. This includes regularly providing documentation about how they developed their AI, what data was used, and analyses of disparate impact and unintended harmful bias. Vendors can also help customers understand how to mitigate any detected bias and put their bias audit results in context.

An Operating System for Responsibly Leveraging AI-based Solutions

Credo AI has been working for years to ensure that AI is always in service to humanity and that an increasingly AI-embedded society will have more equitable access to resources, including employment.

Credo AI provides assessments and governance tools to enterprises, including employers, employment agencies, and HR vendors, to ensure compliant, fair, transparent, and auditable development and use of AI. The Credo AI Responsible AI Platform™ is designed to operationalize enterprise ethical values and current standards that apply to AI systems, along with the rules, regulations, and guidelines that are emerging around evaluating AI systems across key dimensions of responsibility.

Credo AI also conducts comprehensive bias audits of AI-driven HR tools for your organization, whether you are an employer using AI-based HR systems with candidates and employees or an HR vendor selling AI-based tools to customers. The Credo AI Responsible AI Platform supports stakeholders in assessing their technical systems (datasets and models) and organizational processes. These assessments produce actionable reports, including:

- Algorithmic impact assessments

- Transparency reports and disclosures

- Bias audits and reports

- Conformity assessments

- General AI risk assessments and AI risk reports

For HR vendors, Credo AI can help generate generic or customized audit reports to help address customers’ questions and concerns related to regulations like New York City Local Law 144. Credo AI can also help you understand your responsibilities to your customers in the context of the law and generate reports and other artifacts that will help you build trust with the market.

For companies further along in their Responsible AI (RAI) journey, Credo AI’s Responsible AI Governance platform provides a set of tools to help implement, standardize, and streamline AI governance processes across your entire organization. Credo AI can also track and manage AI risk and compliance and ensure that all of your internal and third-party AI/ML systems meet business, ethical, and regulatory requirements at every stage of development and deployment.

A robust Responsible AI auditing and assurance ecosystem is critical to an inclusive world where technology can benefit everyone. Early AI regulation is a strong step in the right direction but requires further guidance and clarification so organizations can truly put principles into practice. We believe that by building the guardrails required to leverage AI ethically and effectively, we will empower the next generation of talent leaders, HR vendors, and candidates through responsible AI-driven solutions.

About Navrina Singh

Navrina Singh is the Founder and CEO of Credo AI, a governance SaaS platform that empowers enterprises to deliver Responsible AI. A technology leader with more than 18 years of experience in enterprise SaaS, AI, and mobile, Navrina has held multiple product and business leadership roles at Microsoft and Qualcomm. She is a member of the U.S. Department of Commerce’s National Artificial Intelligence Advisory Committee (NAIAC), which advises the President and the National AI Initiative Office, and an executive board member of the Mozilla Foundation. Navrina is also a Young Global Leader with the World Economic Forum and served on its future council for AI, guiding policy and regulation in responsible AI. She holds a Master’s in Electrical and Computer Engineering from the University of Wisconsin-Madison, an MBA from the University of Southern California Marshall School of Business, and a Bachelor’s in Electronics and Telecommunication Engineering from the College of Engineering, India.

About Credo AI

Founded in 2020, Credo AI’s mission is to empower organizations to create AI with the highest ethical standards and enable them to deliver Responsible AI (RAI) at scale. Credo AI’s platform provides Responsible AI assessments and governance tools to ensure compliant, fair, transparent, and auditable development and use of AI, regardless of where a company is on its RAI journey. Current Credo AI customers include Global 2000 enterprise companies across HR.

Get even more insights into the current legal landscape regarding using artificial intelligence in HR recruitment by downloading our newest Trends Report: The Impact Of AI Regulations On HR Technology And Employers. Our trends report explores AI’s ethical and explainable use across a wide range of HR technology vendors, helping you thrive in an AI-regulated world.
